In recent years, DevSecOps has surged in popularity. It has changed how developers approach security and write application code. Projects are now secured from start to finish, and developers have become more productive.

This post covers some of the reasons why DevSecOps has become so popular. It should give you a clearer picture of the benefits of adopting it if you haven’t already, as well as why developers may prefer a DevSecOps approach to working on projects.

What is DevSecOps?

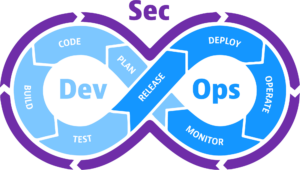

DevSecOps stands for Development, Security, and Operations. It involves automating the process of implementing security throughout every stage of software development. This means that security is taken seriously from the beginning design stages, all the way through to the deployment and delivery stages.
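
To make the idea of automated security at every stage more concrete, here is a minimal Python sketch of a pipeline that runs a security check before each stage and halts on the first finding. The `Stage` class, the stage names, and the naive `scan_for_secrets` check are all hypothetical and chosen only for illustration; real pipelines rely on dedicated scanning tools.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    work: Callable[[], None]                 # the stage's normal job
    security_check: Callable[[], List[str]]  # findings; an empty list means clean


def run_pipeline(stages: List[Stage]) -> Tuple[List[str], List[str]]:
    """Run stages in order and stop at the first one with security findings.

    Returns the names of the completed stages and the findings (if any).
    """
    completed = []
    for stage in stages:
        findings = stage.security_check()
        if findings:
            return completed, [f"{stage.name}: {f}" for f in findings]
        stage.work()
        completed.append(stage.name)
    return completed, []


def scan_for_secrets(source: str) -> List[str]:
    """Hypothetical, deliberately naive check for hardcoded credentials."""
    markers = ("password=", "api_key=")
    lowered = source.lower()
    return [f"possible hardcoded secret ({m})" for m in markers if m in lowered]


# Illustrative input: source code that contains a hardcoded key.
source_code = 'API_KEY="abc123"\nprint("hello")'

stages = [
    Stage("design", lambda: None, lambda: []),
    Stage("build", lambda: None, lambda: scan_for_secrets(source_code)),
    Stage("deploy", lambda: None, lambda: []),
]

completed, findings = run_pipeline(stages)
print("completed:", completed)
print("findings:", findings)
```

In this sketch the build stage is blocked because of the hardcoded key, so the deploy stage never runs. The point is only that the check is automatic and sits inside the pipeline rather than being a manual review at the end.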

This approach to developing and deploying software has helped organizations keep their applications safe. It has also enabled developers to work more productively.

Companies now want to deploy new software more frequently, which puts more pressure on developers to work faster. Under that pressure, security became an afterthought, and attackers exploited too many vulnerabilities. DevSecOps addresses this by building security into the process from the start.

There are several important reasons why DevSecOps has become so popular. Let’s take a look at some of them below.

Better Integration

One of the biggest benefits of DevSecOps is how easily it can be integrated. As a result, organizations have an easier time adopting it and keeping their security risks to a minimum.

Development teams are also able to work more productively because DevSecOps is so easy to integrate, which helps them create more secure code faster. It also helps companies coordinate tasks across teams so that they can collaborate better with each other.

Initially, it may seem challenging to make the move to a DevSecOps approach. However, most organizations find that the benefits of doing so outweigh the challenges involved.

Nowadays, developers are used to working with a DevSecOps approach as a standard way to develop code.

The Cloud

The introduction and widespread use of the cloud has been a big factor in why DevSecOps is so popular. Developers are now accustomed to managing and developing software within cloud environments.

Developers often prefer developing and managing projects in the cloud because it is more transparent and comes with many services that are easy to integrate. In the early days of the cloud, developers were wary of how difficult it would be to move to a cloud service whilst keeping everything secure.

However, the cloud is now so widely used that developers have secure ways of creating code and releasing applications. DevSecOps is one of the approaches to working in the cloud that developers have taken to.

Microservices

Microservices are one of the more technical factors that have led developers to use DevSecOps more. The move to DevSecOps often goes hand in hand with breaking large services down into smaller components.

Containers are the components used to package and deploy applications, and they are geared towards microservices rather than large, centralized servers. As long as developers and security teams practice good security habits whilst using DevSecOps measures, they can work more efficiently.

Kubernetes

The popularity of using containers within cloud environments has led to further developments in DevSecOps and caused more organizations to implement Kubernetes.

Kubernetes is a container orchestration platform that gives developers more control over how they develop and deploy applications with containers. It’s common for DevOps teams to let newer team members make bigger changes to software than before, because the software runs within a container that implements DevSecOps processes.

The software is continuously monitored, and teams are notified of any anomalies that require their attention. This lets them find problems, fix them, and move on much quicker.
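
As a rough illustration of that kind of monitoring, the Python sketch below flags containers whose latest metric strays far from their own recent history. It does not use the Kubernetes API. The container names, the request-rate numbers, and the three-standard-deviation threshold are assumptions made up for this example.

```python
from statistics import mean, pstdev
from typing import Dict, List


def find_anomalies(
    history: Dict[str, List[float]],
    latest: Dict[str, float],
    threshold: float = 3.0,
) -> List[str]:
    """Return the names of containers whose latest value is anomalous.

    A value counts as anomalous when it is more than `threshold`
    standard deviations away from the mean of that container's history.
    """
    anomalous = []
    for name, samples in history.items():
        mu = mean(samples)
        sigma = pstdev(samples)
        value = latest[name]
        if sigma == 0:
            # Perfectly flat history: any change at all is worth a look.
            if value != mu:
                anomalous.append(name)
        elif abs(value - mu) / sigma > threshold:
            anomalous.append(name)
    return anomalous


# Hypothetical requests-per-second samples for two containers.
history = {
    "web": [100, 102, 98, 101, 99],
    "worker": [50, 52, 49, 51, 48],
}
latest = {"web": 101, "worker": 120}

alerts = find_anomalies(history, latest)
for name in alerts:
    print(f"ALERT: {name} is behaving unusually, latest value {latest[name]}")
```

Here only the `worker` container triggers a notification, since its latest value is far outside its usual range. Production monitoring stacks use much richer signals, but the find, notify, fix loop is the same.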

Malware

With more organizations making the transition to cloud environments and microservices, DevOps teams have been provided with more freedom when managing and developing software.

Whilst developers can work more efficiently, this transition has also led to an increase in security threats, one of the main ones being malware. Advanced persistent threats (APTs) are commonly behind malware that is introduced into software development lifecycles.

Some companies find that malware can be slipped into their software without them being able to detect it properly. This is commonly the case with third-party software. Other types of malware can steal data that is being transferred within your cloud environments.

A DevSecOps approach mitigates these problems because it involves carefully planning how data is transferred and how software is secured against malware. It brings security teams and development teams together to boost security and work productively.

DevSecOps only works if security is made a high priority from the very beginning of a development lifecycle. Teams work on the assumption that every piece of software they work on contains malware.

This enables them to take the correct steps to discover evidence of malware and come up with remediation processes to remove it before continuing with development.
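
One small, concrete form of this "trust nothing by default" habit is checking third-party files against known-good checksums before a build continues. The Python sketch below uses the standard `hashlib` module. The file names, contents, and the `verify_artifacts` helper are hypothetical, and a checksum match only shows that a file has not changed since it was pinned, not that it was safe to begin with.

```python
import hashlib
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_artifacts(
    artifacts: Dict[str, bytes],
    pinned: Dict[str, str],
) -> List[str]:
    """Return the names of artifacts that should be rejected.

    An artifact is rejected when it has no pinned digest at all
    or when its actual digest differs from the pinned one.
    """
    rejected = []
    for name, content in artifacts.items():
        expected = pinned.get(name)
        if expected is None or sha256_hex(content) != expected:
            rejected.append(name)
    return rejected


# Hypothetical third-party files; in practice these would be read from disk.
trusted_content = b"def helper():\n    return 42\n"
pinned = {"lib_a.py": sha256_hex(trusted_content)}

artifacts = {
    "lib_a.py": trusted_content,              # matches its pinned digest
    "lib_b.py": b"import os  # unreviewed",   # was never pinned
}

rejected = verify_artifacts(artifacts, pinned)
print("rejected:", rejected)
```

A pipeline could refuse to continue whenever the rejected list is not empty, which turns the assumption that anything might be compromised into an automatic gate.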

Flexibility

DevSecOps allows developers to work with more flexibility. That flexibility during development supports the kind of adaptable infrastructure that large organizations have been implementing.

Teams can work together and organize large projects better, and people with different skills can be added to the projects that need them. Developers and security teams are now using DevSecOps to find ways to work with more flexibility whilst also remaining safe.

Conclusion

After reading our post about why DevSecOps has become so popular, we hope that you’re able to put this information to good use within your own company. DevSecOps helps developers and security teams collaborate so that your software stays secure whilst developers can still work quickly.

Be sure to consider the reasons why DevSecOps is so popular if you have been hesitant about making the move yourself. There are many great benefits to a DevSecOps approach, and your organization could be reaping the rewards.

The post What is DevSecOps? Why Is It So Popular? appeared first on The Crazy Programmer.

from The Crazy Programmer https://ift.tt/3zTRmsr