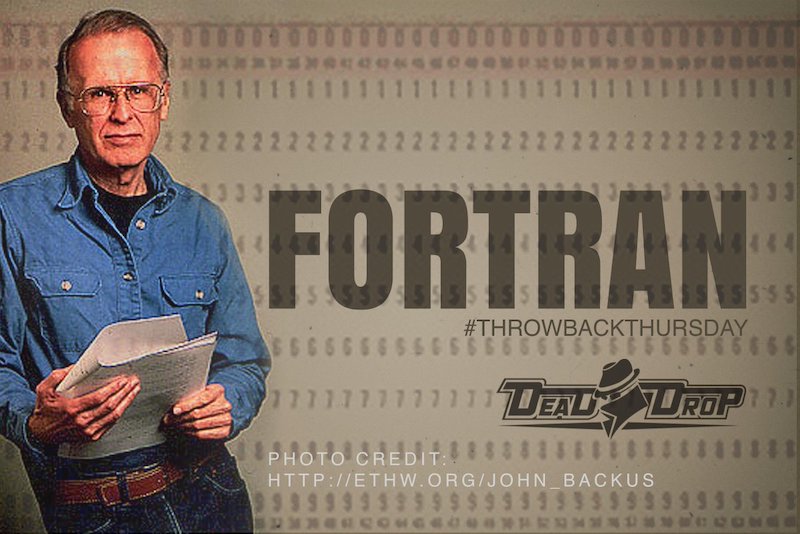

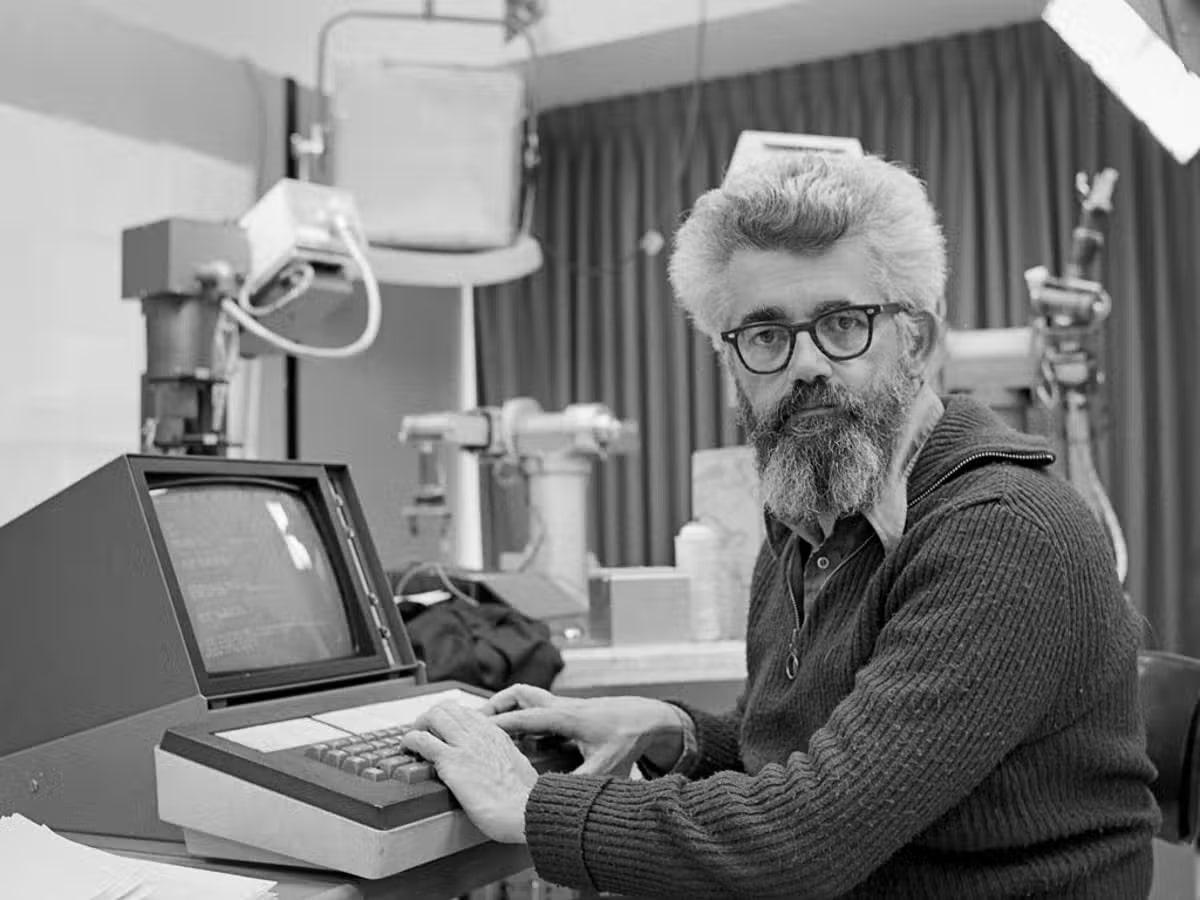

John Backus was a mathematician and computer scientist known for the invention of FORTRAN and for BNF (Backus-Naur Form), a notation for describing the syntax of programming languages. He led the team that developed FORTRAN (Formula Translator), the first widely used high-level programming language. It made computers accessible and practical for scientists and engineers who had no in-depth knowledge of the machinery.

Restless as a young man, John Backus eventually found his place in mathematics: he earned a B.S. in 1949 and an M.A. in 1950 from Columbia University in New York City. He joined International Business Machines (IBM) in 1950.

Tired of laborious hand-coding, he was allowed to assemble a team at IBM to make programming more efficient. His team developed the FORTRAN programming language for numerical analysis. FORTRAN produced programs that were nearly as efficient as those written by hand by professional programmers.

Personal Life

John Backus was born on 3rd December 1924 in Philadelphia and grew up in Delaware. His parents were Cecil Franklin Backus and Elizabeth Warner Edsall. Backus married twice: first to Marjorie Jamison, whom he divorced in 1966, and then to Barbara Una in 1968. He had two daughters, Paula and Karen. After Barbara died in 2004, he moved to Ashland, Oregon, to be near Paula. John Backus died on 17th March 2007 in Ashland.

Education

His family sent him to the Hill School in Pennsylvania, but he was not very studious and got poor grades. Nevertheless, he graduated in 1942 and went on to the University of Virginia. During his freshman year he was expelled for poor attendance, and he joined the US Army.

In the Army, Backus showed an aptitude for medicine and was later sent to Haverford College to study it. In the meantime, he was treated for a brain tumor. He went on to the Flower and Fifth Avenue Medical School in New York but dropped out after nine months. Unsure about his career, he rented an apartment in New York City and built a hi-fi set. He then enrolled in a radio school, where he discovered his talent for mathematics.

Soon afterward, Backus was admitted to Columbia University, where he studied mathematics and earned his degree in 1949. In 1950 IBM hired him to work with the team on the Selective Sequence Electronic Calculator (SSEC), one of the company’s earliest large computers. He worked on this project for almost three years. Part of his job was tending the machine and fixing it when it stopped running. Programming the machine was difficult because there was no organized system for doing it. To streamline the process, Backus created a program he named Speedcoding, which included a “scaling factor” that allowed numbers of widely varying sizes to be stored and manipulated easily.

Working at IBM

After graduating, Backus joined IBM, staking out territory in the emerging field of computer science. He knew little about computers (few people did at the time), but he soon found himself at the field’s cutting edge. In 1952 he led the group of researchers who produced the Speedcoding system for the IBM 701 computer, and a year later he wrote what would prove to be a historic memo. In it, he outlined to Cuthbert Hurd, his boss at IBM, the need for a general-purpose, high-level programming language. That memo was the origin of FORTRAN.

In 1953 IBM approved John’s proposal for a programming language for the IBM 704. Together with a team of professional programmers and mathematicians, he designed the language and its translator.

John felt that a translator would make the machine faster and easier to work with, because it would eliminate the time-consuming, tedious hand-coding that was typical of programming at the time. In essence, the translator would convert programs written in a language designed for people into the binary code that the computer could understand.
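To make the idea of formula translation concrete, here is a minimal Python sketch. It is a hypothetical toy, not the FORTRAN translator, which generated IBM 704 machine code and did far more work, including optimization. It turns an algebraic formula into instructions for an imaginary stack machine; the instruction names (`LOAD`, `PUSH`, `ADD`, `MUL`, and so on) and the functions `translate` and `run` are invented for illustration, and Python’s standard `ast` module does the parsing.

```python
import ast
import operator

# Hypothetical instruction set for an imaginary stack machine (not IBM 704 code).
BIN_OPS = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL", ast.Div: "DIV"}
APPLY = {"ADD": operator.add, "SUB": operator.sub,
         "MUL": operator.mul, "DIV": operator.truediv}


def translate(formula: str) -> list:
    """Translate an algebraic formula such as 'a * b + 2' into stack instructions."""
    code = []

    def emit(node: ast.AST) -> None:
        if isinstance(node, ast.BinOp) and type(node.op) in BIN_OPS:
            emit(node.left)                          # operands first...
            emit(node.right)
            code.append((BIN_OPS[type(node.op)],))   # ...then the operator (postfix order)
        elif isinstance(node, ast.Name):
            code.append(("LOAD", node.id))           # fetch a variable's value
        elif isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            code.append(("PUSH", node.value))        # push a numeric literal
        else:
            raise ValueError(f"unsupported construct: {ast.dump(node)}")

    emit(ast.parse(formula, mode="eval").body)
    return code


def run(code: list, env: dict) -> float:
    """Execute the instructions on a simple stack machine, looking up variables in env."""
    stack = []
    for instr in code:
        op = instr[0]
        if op == "PUSH":
            stack.append(instr[1])
        elif op == "LOAD":
            stack.append(env[instr[1]])
        else:
            right = stack.pop()                      # the right operand was pushed last
            left = stack.pop()
            stack.append(APPLY[op](left, right))
    return stack.pop()


if __name__ == "__main__":
    program = translate("a * b + 2")
    for instr in program:
        print(*instr)
    print(run(program, {"a": 3, "b": 4}))
```

For example, `translate("a * b + 2")` yields `LOAD a`, `LOAD b`, `MUL`, `PUSH 2`, `ADD`, and running those instructions with `a = 3` and `b = 4` gives 14. Emitting operands before their operator (postfix order) is what lets a simple stack machine evaluate nested formulas without extra bookkeeping.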

Career

FORTRAN was a prototype for modern compilers: programs that translate a high-level language into a form that computer hardware can execute. Before FORTRAN, programmers had to endure laboriously entering rows of ones and zeroes, the binary language of computers.

FORTRAN gave programmers greater creativity and freedom and made wide-ranging developments possible, not least because, before it existed, around three-quarters of the cost of running a computer went to programming and debugging. In 1954 IBM published the specification for FORTRAN I, and the following year Backus, with I. Ziller and R. A. Nelson, began working out the bugs in the first version of the language.

In 1954, John and his teammates published a paper titled “Preliminary Report: Specifications for the IBM Mathematical FORmula TRANslating System, FORTRAN.” However, it took them more than two years to complete the language’s compiler, which was then provided with every IBM 704 installation. Over time, with feedback from users, the system became quite efficient and the early “bugs” were removed. More than 50 years later, Fortran is still widely used.

After his work on FORTRAN, John went on to develop Backus-Naur Form (BNF), a standard notation for describing the grammar of high-level languages. It is still used to specify many programming languages today. He continued working on simplifying programming languages, mainly at IBM’s San Jose Research Laboratory and Almaden Research Center. In 1963 he was named one of the first IBM Fellows.
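As an illustration of what a BNF grammar describes, the sketch below writes a small, made-up arithmetic grammar in BNF (shown in the comments) and checks strings against it with a hand-written recursive-descent recognizer in Python. The grammar, the `Parser` class, and `is_valid` are hypothetical examples, not taken from any real language specification. The rules are written right-recursively because a naive recursive-descent parser cannot handle left-recursive rules.

```python
# A tiny, made-up grammar written in BNF:
#
#   <expr>   ::= <term> | <term> "+" <expr>
#   <term>   ::= <factor> | <factor> "*" <term>
#   <factor> ::= <digit> | "(" <expr> ")"
#   <digit>  ::= "0" | "1" | "2" | "3" | "4" | "5" | "6" | "7" | "8" | "9"
#
# The recognizer has one method per nonterminal, so each BNF rule maps onto code.

DIGITS = set("0123456789")


class Parser:
    def __init__(self, text: str) -> None:
        self.text = text.replace(" ", "")   # ignore spaces for simplicity
        self.pos = 0

    def peek(self) -> str:
        """Return the next character, or '' at end of input."""
        return self.text[self.pos] if self.pos < len(self.text) else ""

    def expect(self, ch: str) -> None:
        if self.peek() != ch:
            raise SyntaxError(f"expected {ch!r} at position {self.pos}")
        self.pos += 1

    def expr(self) -> None:      # <expr> ::= <term> | <term> "+" <expr>
        self.term()
        if self.peek() == "+":
            self.expect("+")
            self.expr()

    def term(self) -> None:      # <term> ::= <factor> | <factor> "*" <term>
        self.factor()
        if self.peek() == "*":
            self.expect("*")
            self.term()

    def factor(self) -> None:    # <factor> ::= <digit> | "(" <expr> ")"
        if self.peek() == "(":
            self.expect("(")
            self.expr()
            self.expect(")")
        elif self.peek() in DIGITS:          # <digit>: exactly one digit character
            self.pos += 1
        else:
            raise SyntaxError(f"unexpected {self.peek()!r} at position {self.pos}")


def is_valid(text: str) -> bool:
    """Return True if text is a sentence of the grammar above."""
    parser = Parser(text)
    try:
        parser.expr()
    except SyntaxError:
        return False
    return parser.pos == len(parser.text)   # all input must be consumed


if __name__ == "__main__":
    for sample in ["2*(3+4)", "1+", "(1+2"]:
        print(sample, is_valid(sample))
```

For example, `is_valid("2*(3+4)")` returns `True`, while `is_valid("1+")` and `is_valid("(1+2")` return `False`. Because each method mirrors one BNF rule, the code can be checked against the grammar line by line.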

Founding of FORTRAN

When John and his small team of IBM colleagues set out in 1954 to build a programming system that would let computers produce their own machine-language programs, they were not always sure what they would come up with. As Backus recalled in 1967, whenever they started to solve one problem, it split into others they had not foreseen. In 1955 they expected to need less than a year; they finally finished in 1957.

What John and his team had made was FORTRAN, known as the daddy of programming systems. Many people “think FORTRAN’s key contribution was enabling the programmer to write programs in algebraic formulas rather than machine language. But it isn’t. FORTRAN actually mechanized the organization of loops.” A loop, heavily used in scientific work and in computing payrolls, is a series of instructions that is repeated many times until a specific result is reached.

FORTRAN did increase programmer creativity and productivity. Work that had earlier taken more than 1,000 machine instructions could now be written in just 47 statements. As intended, many engineers and scientists learned to do their own programming. Initially, however, the language was slow to catch on, because users found it hard to believe that a machine could write programs as efficient as hand-coded ones.

By 1958, more than half of all machine instructions on IBM 704s were being generated by FORTRAN. It soon spread to other machines as well. “In a way, FORTRAN was a big boon to competitors, because with their programs tied up in machine language, IBM users were not about to reprogram for another computer. But if a competitor could come up with a program that would translate a FORTRAN program into the language of his own machine, he had a selling point.”

Awards

The achievement earned John Backus the W. W. McDowell Award from the Institute of Electrical and Electronics Engineers (IEEE) in 1967 for outstanding contributions to the computer field.

He received the National Medal of Science in 1975 for pioneering contributions to computer programming languages.

In 1977 he received the prestigious Turing Award, and he was the 1993 recipient of the Charles Stark Draper Prize. He retired from IBM in 1991 and later moved to Ashland, Oregon, where he died on 17th March 2007.

The post John Backus Biography appeared first on The Crazy Programmer.
